Description

Models are mathematical representations of the mechanisms that govern natural phenomena, which are not fully recognized, controlled, or understood. Through computerized decision support systems, mathematical modeling has become an indispensable tool that lets policy makers and researchers express scientific knowledge, make new discoveries, and challenge old dogmas. The notion that all models are wrong but some are useful is bothersome at first sight.

"...Most important, and most difficult to learn, systems thinking requires understanding that all models are wrong and humility about the limitations of our knowledge. Such humility is essential in creating an environment in which we can learn about the complex systems in which we are embedded and work effectively to create the world we truly desire." (Sterman, 2002)

The modeling process encompasses several steps: a clear statement of the objectives of the model, assumptions about the model boundaries, assessment of the appropriateness of the available data, design of the model structure, evaluation of the simulations, and feedback for recommendations and redesign. Model testing is commonly used to prove the rightness of a model, and the tests are typically presented as evidence to promote its acceptance and usability (Sterman, 2002). However, understanding and accepting the wrongness and weaknesses of a model strengthens the modeling process, making it more resilient and powerful during the development, evaluation, and revision phases. Rather than ignoring the fact that a model may fail, one should design evaluations that identify the failures of a model and use them to strengthen the learning process and to develop an improved, redesigned model. In systems thinking, understanding that models are wrong and accepting the limitations of our knowledge are essential in creating an environment in which we can learn about the complexity of systems (Sterman, 2002).

When developing and evaluating a model, one should incorporate important variables despite the foreseeable limits of our scientific knowledge and current modeling techniques. Then, adequate tests should be designed to evaluate the model and identify weaknesses that need to be addressed. Adequate statistical analysis is an indispensable step, especially for predictive models (Tedeschi, 2006).

The Model Evaluation System (MES) was developed to assist in the adequate evaluation of mathematical models using statistical analysis, including linear regression analysis, mean square error of the prediction, concordance correlation coefficient, distribution analysis, deviation analysis, graphics and histograms, and robust statistics.
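
Three of the statistics named above can be illustrated with a short, self-contained Python sketch. It is not the MES implementation, whose exact formulas are not given here. It assumes the common textbook definitions: regression of observed on predicted values, the mean square error of the prediction (MSEP) split into mean bias, slope bias, and random error, and Lin's concordance correlation coefficient, all computed with divide-by-n moments. The function name `evaluate_predictions` and the sample data are hypothetical.

```python
import math


def evaluate_predictions(observed, predicted):
    """Return basic adequacy statistics for paired observed/predicted values.

    Illustrative sketch only; assumes textbook definitions, not the MES code.
    """
    n = len(observed)
    if n != len(predicted) or n < 2:
        raise ValueError("need two equal-length sequences with at least 2 points")

    mean_o = sum(observed) / n
    mean_p = sum(predicted) / n
    # Population (divide-by-n) moments keep the MSEP decomposition exact.
    var_o = sum((o - mean_o) ** 2 for o in observed) / n
    var_p = sum((p - mean_p) ** 2 for p in predicted) / n
    cov_op = sum((o - mean_o) * (p - mean_p)
                 for o, p in zip(observed, predicted)) / n
    if var_o == 0 or var_p == 0:
        raise ValueError("observed and predicted values must each vary")

    # Linear regression of observed (Y) on predicted (X).
    slope = cov_op / var_p
    intercept = mean_o - slope * mean_p
    r2 = cov_op ** 2 / (var_o * var_p)

    # Mean square error of the prediction and its three components.
    msep = sum((o - p) ** 2 for o, p in zip(observed, predicted)) / n
    mean_bias = (mean_p - mean_o) ** 2
    slope_bias = var_p * (1 - slope) ** 2
    random_error = (1 - r2) * var_o

    # Lin's concordance correlation coefficient.
    ccc = 2 * cov_op / (var_o + var_p + (mean_o - mean_p) ** 2)

    return {
        "intercept": intercept,
        "slope": slope,
        "r2": r2,
        "msep": msep,
        "rmsep": math.sqrt(msep),
        "mean_bias": mean_bias,
        "slope_bias": slope_bias,
        "random_error": random_error,
        "ccc": ccc,
    }


# Hypothetical data: five observations and the matching model predictions.
stats = evaluate_predictions([10, 12, 15, 18, 20], [11, 12, 14, 19, 21])
for name, value in stats.items():
    print(f"{name}: {value:.4f}")
```

A model that predicts without bias would show an intercept near 0 and a slope near 1 in this regression, with most of the MSEP attributed to random error rather than to mean or slope bias.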

Download

Visual Basic 6 (SP6)

32 bit and 64 bit Compatible

The MES is programmed in Microsoft Visual Basic 6.0 and works with most IBM-PC compatible computers running Microsoft Windows XP or later.

The current version of the Model Evaluation System is Loading...

Note that upgrading to this version may require uninstalling an earlier version.

What is new in MES versions later than 3.1?

- Model Comparison System (MCS). The MCS allows users to simultaneously compare the adequacy of different models when predicting the same observed values.

- Connection to R (Click here to download R). Both MES and MCS can run scripts written in the R language to perform additional analyses and produce high-quality graphics; users can write their own R code.

- New calculations were added to MES and MCS, including Akaike's Information Criterion and deviation analysis.

- New calculations for robust statistics, including Sn and Qn.

- Auto-update, which checks the web for new versions.
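
As a rough illustration of comparing several models against the same observed values with Akaike's Information Criterion, the sketch below uses the common least-squares form AIC = n ln(SSE/n) + 2k, which assumes independent Gaussian errors and drops constant terms. Whether the MCS uses this exact form is not stated here, so treat the formula, the function names `aic_least_squares` and `compare_models`, the parameter counts, and the data as assumptions.

```python
import math


def aic_least_squares(observed, predicted, n_params):
    """AIC for a least-squares fit, assuming Gaussian errors: n*ln(SSE/n) + 2k."""
    n = len(observed)
    if n == 0 or n != len(predicted):
        raise ValueError("need two equal-length, non-empty sequences")
    sse = sum((o - p) ** 2 for o, p in zip(observed, predicted))
    if sse == 0:
        raise ValueError("AIC is undefined for a perfect fit (SSE = 0)")
    return n * math.log(sse / n) + 2 * n_params


def compare_models(observed, candidates):
    """Rank candidate models by AIC (lowest first).

    `candidates` maps a model name to (predicted values, number of parameters).
    Returns a list of (name, aic, delta_aic) tuples, where delta_aic is the
    difference from the best (lowest) AIC.
    """
    scores = {
        name: aic_least_squares(observed, predicted, n_params)
        for name, (predicted, n_params) in candidates.items()
    }
    best = min(scores.values())
    return sorted(
        ((name, aic, aic - best) for name, aic in scores.items()),
        key=lambda row: row[1],
    )


# Hypothetical example: two models predicting the same five observations.
observed = [10, 12, 15, 18, 20]
ranking = compare_models(
    observed,
    {
        "model_a": ([11, 12, 14, 19, 21], 2),
        "model_b": ([13, 10, 17, 15, 23], 2),
    },
)
for name, aic, delta in ranking:
    print(f"{name}: AIC = {aic:.3f}, delta = {delta:.3f}")
```

Only differences in AIC between models evaluated on the same observations are meaningful; the absolute values depend on the constants dropped from the formula.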

Previous versions can be downloaded from here.

Registration

The MES will expire after 10 trial uses if it is not registered by the end of the grace period. You may register your copy by submitting the license number on the Register webpage. If applicable, registration codes are issued only after the full registration fee has been paid on the Purchase webpage.

Developers

Dr. Luis O. Tedeschi

Support

The following list summarizes corrections, enhancements, and functional improvements made to the software, presented in reverse chronological order (newest to oldest). Each entry reflects updates implemented to improve stability, usability, and overall performance.

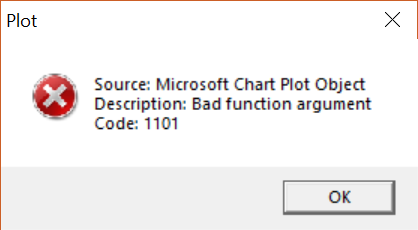

February 25, 2018. Error 1101 occurs when trying to change the background color of the plot. This is a known problem with the Microsoft Chart ActiveX Control (MSChart 2.0.OCX). The bug was introduced by a Microsoft update to Visual Basic 6 that upgraded MSChart 2.0 (OCX) to version 6.1.98.16; the previous version, 6.0.88.4, did not have this problem. To work around it, download and unzip mschart20.zip, then copy mschart20.ocx to the Windows\SysWOW64 folder. Alternatively, delete mschart20.ocx from the Windows\SysWOW64 folder and repair MES.

October 15, 2004. Presentation showing the calculations of the MES and how to interpret them. Tedeschi, L. O. 2004. Assessment of the Adequacy of Mathematical Models. 49 p. in Workshop on Model Evaluation, Sassari, Italy.

Additional references are listed on the Publications web page.

Links

Software for model development

- ACSL by AEgis Technologies

- AnyLogic by XJ Technologies

- EcosimPro® for Windows by EA International

- Extend by Imagine That, Inc

- Powersim by Powersim Software AS

- ProModel by ProModel, Inc

- Simile by Simulistics

- System Modeler by Wolfram

- Stella/iThink by High Performance Systems

- Vensim by Ventana Systems, Inc

- VisSim by Visual Solutions, Inc

Software for model evaluation

- PEPI: computer programs for epidemiologic analyses

- IRENE: Integrated Resources for Evaluating Numerical Estimates

- SESDYN: Socio-Economic System Dynamics

Other modeling resources

- Modeling and simulation resources by Andrea E. Rizzoli

- Ecological models by John F. Weishampel

- Textbooks on ecological modeling by Michael Knorrenschild

- Model Evaluation by Saed Sayad